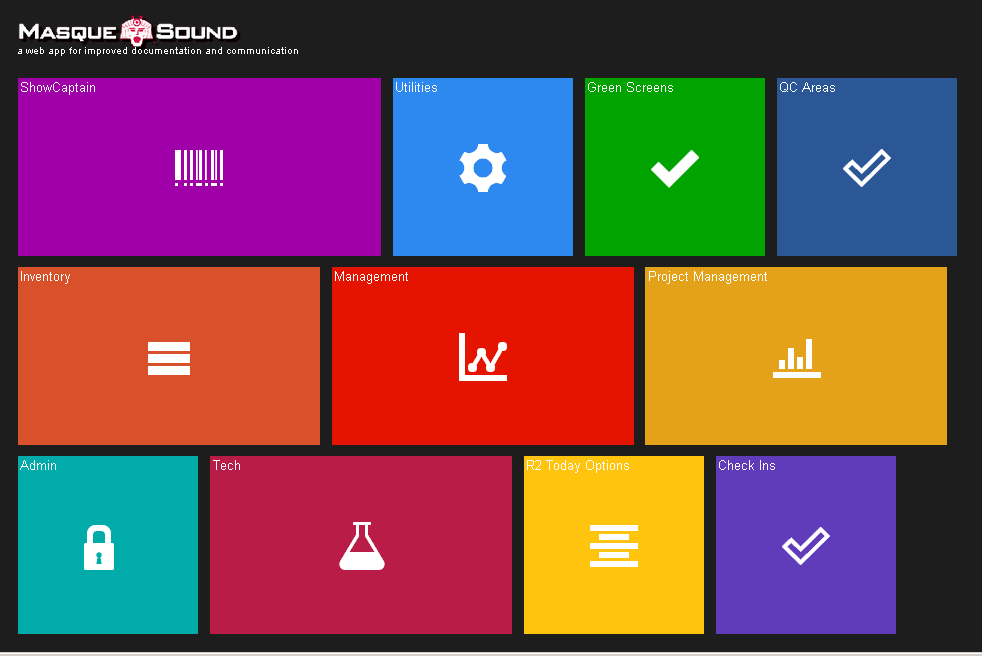

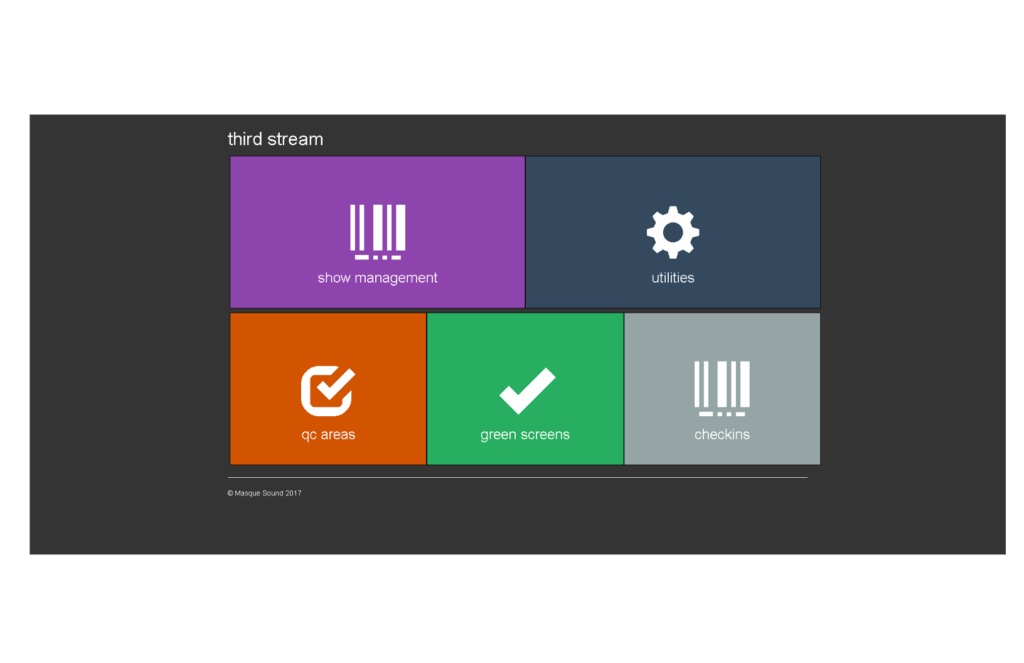

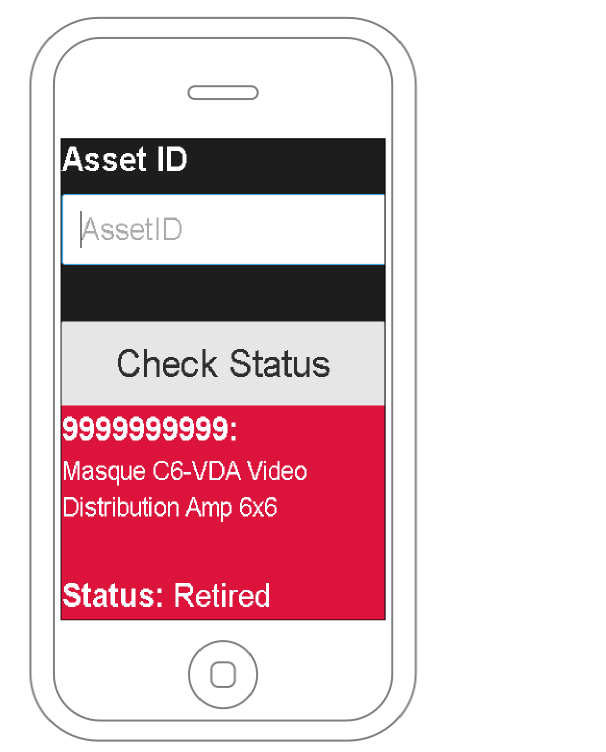

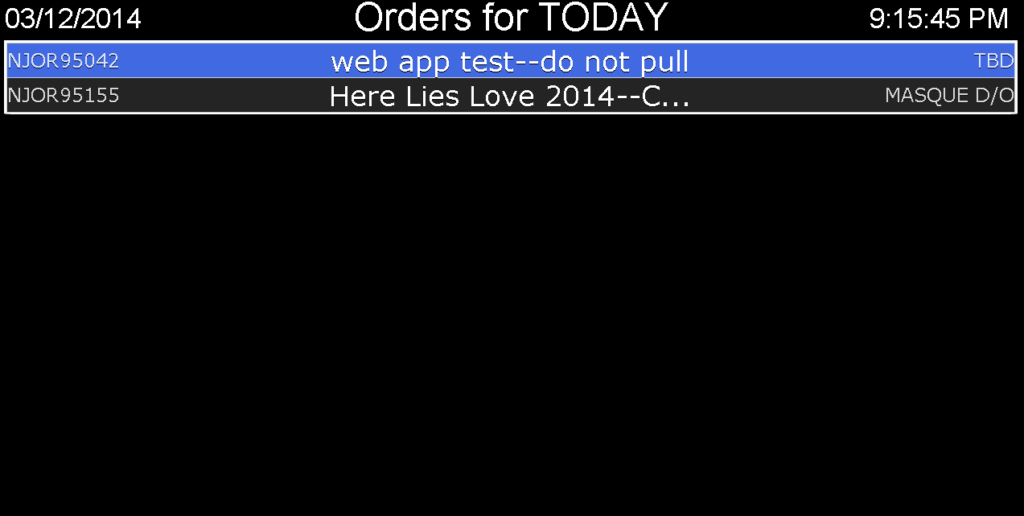

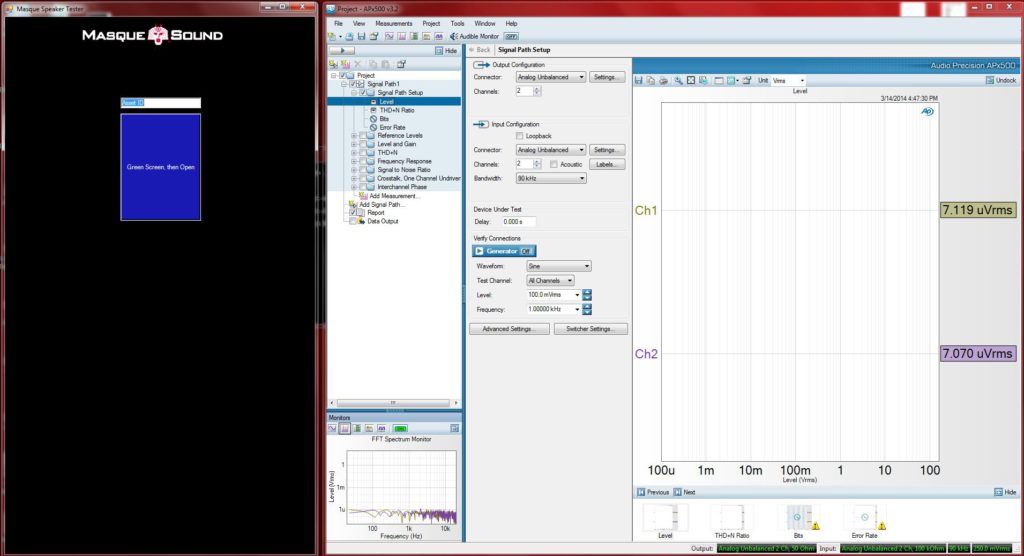

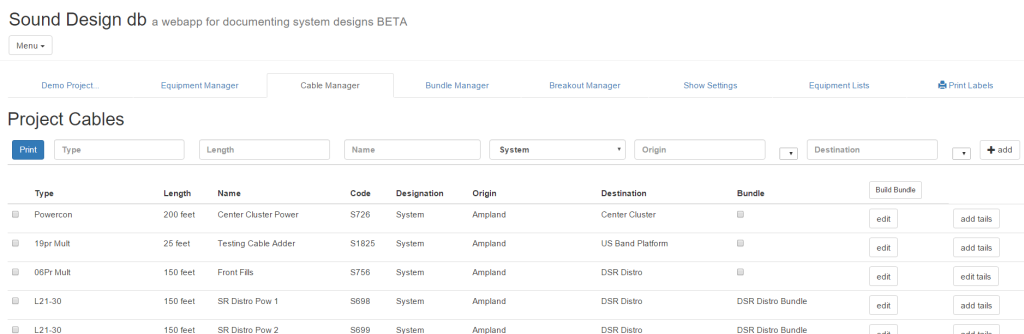

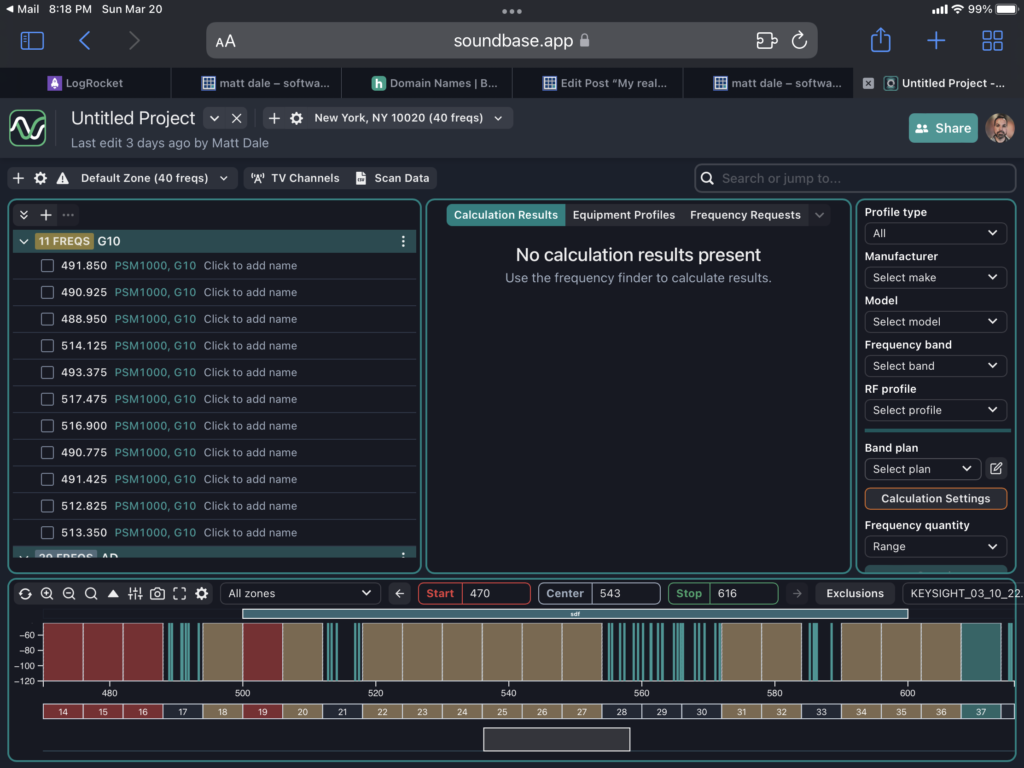

What started out as a simple app for documenting device configuration and tracking devices on show site in a live production environment turned into a set of very complex requirements. Here are some of them:

- Multi-project file system (like a Google Docs system)

- Multi-user project collaboration

- Multiple permission levels

- Multi-level permissions per app, per area of the app, per project

- Real-time updates for all users

- A high-performance data grid with row and column drag-and-drop

- Custom data-grid cells with typeahead, selectors, radio groups, etc.

- A chat system for user-to-user DMs and project collaborators (user groups)

- Project export in many formats (CSV, Excel, PDF)

- A PDF label printer per row

- A status history tracker per row

- All the niceties we have come to expect from Google Docs:

  - Project open and edit history

  - Per document, per field edit history

  - Undo/Redo

  - User presence per project with idle tracking
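
The per-app, per-area, per-project permission requirement in the list above can be pictured as a set of grants where the most specific matching grant wins. The following is only a minimal sketch under that assumption, not the app's actual data model; the names `Level`, `Grant`, `resolveLevel`, and `can`, as well as the four level names, are hypothetical.

```typescript
// Hypothetical permission levels, ordered from least to most access.
type Level = "none" | "view" | "edit" | "admin";
const RANK: Record<Level, number> = { none: 0, view: 1, edit: 2, admin: 3 };

// A grant applies to an app, optionally narrowed to one area and/or one project.
interface Grant {
  app: string;
  area?: string;
  project?: string;
  level: Level;
}

// Assumed precedence: a project-scoped grant outranks an area-scoped one,
// and a grant scoped to both outranks either.
function specificity(g: Grant): number {
  return (g.project !== undefined ? 2 : 0) + (g.area !== undefined ? 1 : 0);
}

// Resolve the effective level: the most specific matching grant wins,
// and no matching grant means no access.
function resolveLevel(grants: Grant[], app: string, area: string, project: string): Level {
  const matching = grants.filter(
    (g) =>
      g.app === app &&
      (g.area === undefined || g.area === area) &&
      (g.project === undefined || g.project === project),
  );
  if (matching.length === 0) return "none";
  return matching.reduce((best, g) => (specificity(g) > specificity(best) ? g : best)).level;
}

// True when the resolved level is at least the required level.
function can(grants: Grant[], app: string, area: string, project: string, required: Level): boolean {
  return RANK[resolveLevel(grants, app, area, project)] >= RANK[required];
}
```

With this shape, a user could hold a broad "view" grant on an app, an "edit" grant on one project, and a narrower grant that removes access to a single area of that project, all without special cases in the checking code.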

The goal of this product was to create an ecosystem that helps technicians work more efficiently from show to show, within one framework. The standard tool currently used is Google Docs/Sheets, so persuading techs to move away from such an entrenched tool would require a really well-designed product.

Picking the Frontend Tech

Since my experience with the newer JS frameworks was limited, React was a good choice for this project. Considering the internet is full of resources teaching React, it was simple to get started.

We quickly found that, because React has changed so fast over the years, many tutorials were outdated or taught conflicting concepts (class-based components vs. function components and hooks). I wouldn’t change this tech selection, since so many resources are available, but at times it has been a little difficult to be certain that I made the correct choice.

We wanted a front-end UI library that was well established and had the set of features we would require. We are on the third iteration of this project: the first two used the BlueprintJS component library, and the third uses the Chakra-UI library.

The Chakra-UI library has enabled us to move VERY quickly. We developed a simple style guide that allows us to iterate on UIs without getting stuck on CSS and layout.

Picking the Backend (and Frontend) Tech

My original plan was to use Django as the backend and single source of truth, with a Postgres database and a Django REST Framework API on top of it. I naively built a custom architecture for the real-time aspect of the system, with Google Firestore maintaining a diff per user per database table. This system worked well for the first few events we used it on, but the edge cases made it very difficult to maintain, and keeping it running at scale would have been tough.

After the second show using the app, we realized that the architecture needed to change. Around this time, I heard Filipe from MeteorJS on the Syntax podcast and tried MeteorJS. After the first two days using it, I realized that a rebuild of our app in Meteor would be more than worth the effort in both the short and the long term.

Meteor provides all the real-time aspects that we required, plus a good number of the “batteries included” features that Django provides (like a user system). Meteor is stable, having been around since 2012, and it works very well with React.

Using Meteor, we didn’t need separate systems for the front end and back end, as Meteor serves both very nicely. Buying into the Meteor ecosystem has allowed us to build features extremely fast rather than getting stuck in the weeds with architecture. Meteor has already figured out the tough edge cases of real-time data, and its DDP/Method system allows us to pick which sets of data will be real-time enabled and which will be fetched on demand.
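
To make the real-time versus on-demand split concrete, here is a conceptual model in plain TypeScript. It deliberately does not use Meteor's API: in Meteor, the real-time side corresponds to publications and subscriptions (`Meteor.publish` / `Meteor.subscribe`) and the on-demand side to methods (`Meteor.methods` / `Meteor.call`). The `DeviceStore` class, the `Row` type, and every method name below are hypothetical and exist only for illustration.

```typescript
// Conceptual model only: plain TypeScript, not Meteor's API.
type Row = { id: string; status: string };
type Listener = (rows: Row[]) => void;

class DeviceStore {
  private rows = new Map<string, Row>();
  private listeners = new Set<Listener>();

  // Real-time path (plays the role of a publication plus subscription):
  // the listener receives the current rows immediately and again after
  // every change, until the returned stop function is called.
  subscribe(listener: Listener): () => void {
    this.listeners.add(listener);
    listener(this.snapshot());
    return () => {
      this.listeners.delete(listener);
    };
  }

  // On-demand path (plays the role of a method call):
  // the caller gets one result and no further updates.
  fetchOnce(): Row[] {
    return this.snapshot();
  }

  // Insert or update a row, then push the new state to every subscriber.
  upsert(row: Row): void {
    this.rows.set(row.id, row);
    const current = this.snapshot();
    this.listeners.forEach((listener) => listener(current));
  }

  // Copy the rows so callers cannot mutate the store's internal state.
  private snapshot(): Row[] {
    return Array.from(this.rows.values()).map((r) => ({ ...r }));
  }
}
```

In a model like this, a data grid that every collaborator watches would sit on the `subscribe` path, while something like an export or an edit-history lookup would use `fetchOnce`, since nobody needs it to update live.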

With this system in place, we will be able to take the product to the next level and provide technicians with a web-based product that is robust, stable, and feature-rich.